Good evening,

@Integra I could use a bit more help with detections ^^

(I'm not sure whether I should open a new topic… But since this is related to what I did here… @WarC0zes, if you think a separate topic makes more sense, would you mind splitting this thread starting from this message? Thanks in advance.)

I have new cameras, Annke C800s, and I managed to configure their streams.

I've set up two of them for testing before I move next month.

One is in the living room next door and the other is in the office.

The one in the living room is fine: the settings I originally made for the Reolink work well. (I'll put the configuration file at the end of this message.)

But with the one in the office, I get no detections at all.

I assume that's because the camera is closer to what it films, so the same moving objects appear larger.

But I don't know how to change the detection parameter values so that a human is detected as a person, and my cat as a cat.

And of course, I hope it's possible to define detection parameters for one camera that differ from those in the global section…
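To make the question concrete: with `detect` set to 1280x720, a frame holds 921,600 pixels, so the global `max_area: 100000` for `person` allows a bounding box of at most about 11% of the frame, and `max_area: 10000` for `cat` about 1%. If the filter areas are counted in detect-frame pixels (my assumption), a person or a cat close to the office camera could easily exceed those limits. What I imagine is something like the sketch below. It assumes that an `objects.filters` block placed under a camera overrides the global one, as the "Can be overridden at the camera level" note in the global section suggests. The values marked as placeholders are made up for illustration and untested.

```yaml
cameras:
  Camera_Annke_C800_Test_2:
    # ... existing ffmpeg / detect / record / snapshots / zones settings unchanged ...
    objects:
      filters:
        person:
          min_area: 5000      # same as the global value
          max_area: 500000    # placeholder: about 54% of a 1280x720 frame
          min_ratio: 0.4      # same as the global value
          max_ratio: 2.0      # placeholder: allows boxes wider than they are tall
          min_score: 0.7      # same as the global value
          threshold: 0.7      # same as the global value
        cat:
          min_area: 2000      # same as the global value
          max_area: 100000    # placeholder: about 11% of a 1280x720 frame
          min_score: 0.6      # same as the global value
          threshold: 0.7      # same as the global value
          min_ratio: 0.4      # same as the global value
          max_ratio: 2.0      # same as the global value
```

The idea would be to read the real box areas and ratios for this camera from Frigate's debug view, if they are displayed there, and replace the placeholders with values that bracket them.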

Here is the configuration file:

```yaml
# yaml-language-server: $schema=http://192.168.2.64:5000/api/config/schema.json

mqtt:
  enabled: true
  host: 192.168.2.140
  port: 1883
  user: '{FRIGATE_MQTT_USER}'
  password: '{FRIGATE_MQTT_PASSWORD}'
  stats_interval: 60

# Optional: Detectors configuration. Defaults to a single CPU detector
detectors:
  # Required: name of the detector
  coral:
    # Required: type of the detector
    # Frigate provided types include 'cpu', 'edgetpu', 'openvino' and 'tensorrt' (default: shown below)
    # Additional detector types can also be plugged in.
    # Detectors may require additional configuration.
    # Refer to the Detectors configuration page for more information.
    type: edgetpu
    device: usb

# Optional: Database configuration
database:
  # The path to store the SQLite DB (default: shown below)
  path: /config/frigate.db

# Optional: TLS configuration
tls:
  # Optional: Enable TLS for port 8971 (default: shown below)
  enabled: true

# Optional: Authentication configuration
auth:
  # Optional: Enable authentication
  enabled: true
  # Optional: Reset the admin user password on startup (default: shown below)
  # New password is printed in the logs
  reset_admin_password: false
  # Optional: Cookie to store the JWT token for native auth (default: shown below)
  #cookie_name: "frigate_token"
  # Optional: Set secure flag on cookie. (default: False)
  # NOTE: This should be set to True if you are using TLS
  cookie_secure: true
  # Optional: Session length in seconds (default: shown below)
  session_length: 86400 # 24 hours
  # Optional: Refresh time in seconds (default: shown below)
  # When the session is going to expire in less time than this setting,
  # it will be refreshed back to the session_length.
  refresh_time: 43200 # 12 hours
  # Optional: Rate limiting for login failures to help prevent brute force
  # login attacks (default: None)
  # See the docs for more information on valid values
  failed_login_rate_limit: 1/second;5/minute;20/hour
  # Optional: Trusted proxies for determining IP address to rate limit
  # NOTE: This is only used for rate limiting login attempts and does not bypass
  # authentication. See the authentication docs for more details.
  trusted_proxies:
    - 172.18.0.0/16 # <---- this is the subnet for the internal docker compose network
    - 192.168.2.141/32 # <---- SWAG Reverse Proxy
  # Optional: Number of hashing iterations for user passwords
  # As of Feb 2023, OWASP recommends 600000 iterations for PBKDF2-SHA256
  # NOTE: changing this value will not automatically update password hashes, you
  # will need to change each user password for it to apply
  hash_iterations: 600000

ffmpeg:
  global_args: -hide_banner -loglevel warning
  hwaccel_args: preset-vaapi
  output_args:
    record: preset-record-generic-audio-copy

birdseye:
  enabled: false

snapshots:
  # Optional: Enable writing jpg snapshot to /media/frigate/clips (default: shown below)
  enabled: true
  # Optional: save a clean PNG copy of the snapshot image (default: shown below)
  clean_copy: false
  # Optional: print a timestamp on the snapshots (default: shown below)
  timestamp: false
  # Optional: draw bounding box on the snapshots (default: shown below)
  bounding_box: true
  # Optional: crop the snapshot (default: shown below)
  crop: false
  # Optional: height to resize the snapshot to (default: original size)
  height: 720
  # Optional: Restrict snapshots to objects that entered any of the listed zones (default: no required zones)
  required_zones: []
  # Optional: Camera override for retention settings (default: global values)
  retain:
    # Required: Default retention days (default: shown below)
    default: 4
    # Optional: Per object retention days
    objects:
      person: 4
  # Optional: quality of the encoded jpeg, 0-100 (default: shown below)
  quality: 100

# Optional: Record configuration
# NOTE: Can be overridden at the camera level
record:
  # Optional: Enable recording (default: shown below)
  # WARNING: If recording is disabled in the config, turning it on via
  # the UI or MQTT later will have no effect.
  enabled: true
  # Optional: Number of minutes to wait between cleanup runs (default: shown below)
  # This can be used to reduce the frequency of deleting recording segments from disk if you want to minimize i/o
  expire_interval: 60
  # Optional: Sync recordings with disk on startup and once a day (default: shown below).
  sync_recordings: false
  # Optional: Retention settings for recording
  retain:
    # Optional: Number of days to retain recordings regardless of events (default: shown below)
    # NOTE: This should be set to 0 and retention should be defined in events section below
    # if you only want to retain recordings of events.
    days: 3 # <- number of days to keep continuous recordings -- set to 0 to purge
    # Optional: Mode for retention. Available options are: all, motion, and active_objects
    # all - save all recording segments regardless of activity
    # motion - save all recordings segments with any detected motion
    # active_objects - save all recording segments with active/moving objects
    # NOTE: this mode only applies when the days setting above is greater than 0
    #mode: active_objects
    mode: all
  export:
    # Optional: Timelapse Output Args (default: shown below).
    # NOTE: The default args are set to fit 24 hours of recording into 1 hour playback.
    # See https://stackoverflow.com/a/58268695 for more info on how these args work.
    # As an example: if you wanted to go from 24 hours to 30 minutes that would be going
    # from 86400 seconds to 1800 seconds which would be 1800 / 86400 = 0.02.
    # The -r (framerate) dictates how smooth the output video is.
    # So the args would be -vf setpts=0.02*PTS -r 30 in that case.
    timelapse_args: -vf setpts=0.04*PTS -r 30
  # Optional: Recording Preview Settings
  preview:
    # Optional: Quality of recording preview (default: shown below).
    # Options are: very_low, low, medium, high, very_high
    quality: high
  # Optional: Event recording settings
  events:
    # Optional: Number of seconds before the event to include (default: shown below)
    pre_capture: 5
    # Optional: Number of seconds after the event to include (default: shown below)
    post_capture: 5
    # Optional: Objects to save recordings for. (default: all tracked objects)
    objects:
      - person
    # Optional: Retention settings for recordings of events
    retain:
      # Required: Default retention days (default: shown below)
      default: 4 # <- number of days to keep event recordings -- set to 0 to purge
      # Optional: Mode for retention. (default: shown below)
      # all - save all recording segments for events regardless of activity
      # motion - save all recordings segments for events with any detected motion
      # active_objects - save all recording segments for event with active/moving objects
      #
      # NOTE: If the retain mode for the camera is more restrictive than the mode configured
      # here, the segments will already be gone by the time this mode is applied.
      # For example, if the camera retain mode is "motion", the segments without motion are
      # never stored, so setting the mode to "all" here won't bring them back.
      mode: motion
      # Optional: Per object retention days
      objects:
        person: 4
        cat: 4

# Optional: Review configuration
# NOTE: Can be overridden at the camera level
review:
  # Optional: alerts configuration
  alerts:
    # Optional: labels that qualify as an alert (default: shown below)
    labels:
      - person
    # Optional: required zones for an object to be marked as an alert (default: none)
    # NOTE: when settings required zones globally, this zone must exist on all cameras
    # or the config will be considered invalid. In that case the required_zones
    # should be configured at the camera level.
    required_zones: []
  # Optional: detections configuration
  detections:
    # Optional: labels that qualify as a detection (default: all labels that are tracked / listened to)
    labels:
      - cat
    # Optional: required zones for an object to be marked as a detection (default: none)
    # NOTE: when settings required zones globally, this zone must exist on all cameras
    # or the config will be considered invalid. In that case the required_zones
    # should be configured at the camera level.
    required_zones: []

# Optional: Motion configuration
# NOTE: Can be overridden at the camera level
motion:
  # Optional: enables detection for the camera (default: True)
  # NOTE: Motion detection is required for object detection,
  # setting this to False and leaving detect enabled
  # will result in an error on startup.
  enabled: true
  # Optional: The threshold passed to cv2.threshold to determine if a pixel is different enough to be counted as motion. (default: shown below)
  # Increasing this value will make motion detection less sensitive and decreasing it will make motion detection more sensitive.
  # The value should be between 1 and 255.
  threshold: 20 #30
  # Optional: The percentage of the image used to detect lightning or other substantial changes where motion detection
  # needs to recalibrate. (default: shown below)
  # Increasing this value will make motion detection more likely to consider lightning or ir mode changes as valid motion.
  # Decreasing this value will make motion detection more likely to ignore large amounts of motion such as a person approaching
  # a doorbell camera.
  lightning_threshold: 0.8
  # Optional: Minimum size in pixels in the resized motion image that counts as motion (default: shown below)
  # Increasing this value will prevent smaller areas of motion from being detected. Decreasing will
  # make motion detection more sensitive to smaller moving objects.
  # As a rule of thumb:
  # - 10 - high sensitivity
  # - 30 - medium sensitivity
  # - 50 - low sensitivity
  contour_area: 10
  # Optional: Alpha value passed to cv2.accumulateWeighted when averaging frames to determine the background (default: shown below)
  # Higher values mean the current frame impacts the average a lot, and a new object will be averaged into the background faster.
  # Low values will cause things like moving shadows to be detected as motion for longer.
  # https://www.geeksforgeeks.org/background-subtraction-in-an-image-using-concept-of-running-average/
  frame_alpha: 0.01
  # Optional: Height of the resized motion frame (default: 100)
  # Higher values will result in more granular motion detection at the expense of higher CPU usage.
  # Lower values result in less CPU, but small changes may not register as motion.
  frame_height: 100
  # Optional: motion mask
  # NOTE: see docs for more detailed info on creating masks
  # mask: 0.000,0.469,1.000,0.469,1.000,1.000,0.000,1.000
  # Optional: improve contrast (default: shown below)
  # Enables dynamic contrast improvement. This should help improve night detections at the cost of making motion detection more sensitive
  # for daytime.
  improve_contrast: true
  # Optional: Delay when updating camera motion through MQTT from ON -> OFF (default: shown below).
  mqtt_off_delay: 30

# Optional: Object configuration
# NOTE: Can be overridden at the camera level
objects:
  # Optional: list of objects to track from labelmap.txt (default: shown below)
  track:
    - person
    - cat
  # Optional: filters to reduce false positives for specific object types
  filters:
    person:
      # Optional: minimum width*height of the bounding box for the detected object (default: 0)
      min_area: 5000
      # Optional: maximum width*height of the bounding box for the detected object (default: 24000000)
      max_area: 100000
      # Optional: minimum width/height of the bounding box for the detected object (default: 0)
      min_ratio: 0.4 #0.6
      # Optional: maximum width/height of the bounding box for the detected object (default: 24000000)
      max_ratio: 1 #2.0
      # Optional: minimum score for the object to initiate tracking (default: shown below)
      min_score: 0.7 #0.5
      # Optional: minimum decimal percentage for tracked object's computed score to be considered a true positive (default: shown below)
      threshold: 0.7 #0.7
    cat: # See here: https://community.home-assistant.io/t/frigate-detecting-small-objects-birds-cats-etc/565386/10?u=milesteg
      min_area: 2000 # Adjust depending on the size and distance of the object in view
      max_area: 10000 # Limit larger object detections
      min_score: 0.6 # Confidence score for detection
      threshold: 0.7 # Higher threshold to avoid false positives
      min_ratio: 0.4 # Cat's body tends to be longer than it is tall
      max_ratio: 2.0 # Allow for a longer body, so the width can be up to twice the height

go2rtc:
  webrtc:
    candidates:
      - 192.168.2.64:8555
      - stun:8555
  streams:
    Camera_Salon_RLC_510A:
      - rtsp://{FRIGATE_RTSP_REOLINK_USER}:{FRIGATE_RTSP_REOLINK_PASSWORD}@192.168.2.163:554/Preview_01_main
      - ffmpeg:Camera_Salon_RLC_510A#audio=aac # <- copy of the stream which transcodes audio to the missing codec (usually will be opus)
    Camera_Salon_RLC_510A_sub:
      - rtsp://{FRIGATE_RTSP_REOLINK_USER}:{FRIGATE_RTSP_REOLINK_PASSWORD}@192.168.2.163:554/Preview_01_sub
      - ffmpeg:Camera_Salon_RLC_510A_sub#audio=aac # <- copy of the stream which transcodes audio to the missing codec (usually will be opus)
    Camera_Annke_C800_Test_1:
      - rtsp://{FRIGATE_RTSP_ANNKE_USER}:{FRIGATE_RTSP_ANNKE_PASSWORD}@192.168.2.164:554/H264/ch1/main/av_stream
      - ffmpeg:Camera_Annke_C800_Test_1#audio=aac # <- copy of the stream which transcodes audio to the missing codec (usually will be opus)
    Camera_Annke_C800_Test_1_sub:
      - rtsp://{FRIGATE_RTSP_ANNKE_USER}:{FRIGATE_RTSP_ANNKE_PASSWORD}@192.168.2.164:554/H264/ch1/sub/av_stream
      - ffmpeg:Camera_Annke_C800_Test_1_sub#audio=aac # <- copy of the stream which transcodes audio to the missing codec (usually will be opus)
    Camera_Annke_C800_Test_2:
      - rtsp://{FRIGATE_RTSP_ANNKE_USER}:{FRIGATE_RTSP_ANNKE_PASSWORD}@192.168.2.165:554/H264/ch1/main/av_stream
      - ffmpeg:Camera_Annke_C800_Test_2#audio=aac # <- copy of the stream which transcodes audio to the missing codec (usually will be opus)
    Camera_Annke_C800_Test_2_sub:
      - rtsp://{FRIGATE_RTSP_ANNKE_USER}:{FRIGATE_RTSP_ANNKE_PASSWORD}@192.168.2.165:554/H264/ch1/sub/av_stream
      - ffmpeg:Camera_Annke_C800_Test_2_sub#audio=aac # <- copy of the stream which transcodes audio to the missing codec (usually will be opus)

cameras:
  Camera_Salon_RLC_510A:
    enabled: true
    ffmpeg:
      hwaccel_args: preset-vaapi
      # hwaccel_args: preset-intel-qsv-h265
      inputs:
        - path: rtsp://127.0.0.1:8554/Camera_Salon_RLC_510A # <--- the name here must match the name of the camera in restream
          input_args: preset-rtsp-restream
          roles:
            - record
            - detect
        - path: rtsp://127.0.0.1:8554/Camera_Salon_RLC_510A_sub # <--- the name here must match the name of the camera in restream
          input_args: preset-rtsp-restream
          roles: []
    detect:
      enabled: true # <---- disable detection until you have a working camera feed
      fps: 10
      width: 1280 # 640 <---- update for your camera's resolution
      height: 720 # 480 <---- update for your camera's resolution
    record: # <----- Enable recording
      enabled: true
    snapshots:
      enabled: true
    motion:
      mask:
        - 0.037,0.5,0.044,0.678,0.21,0.663,0.211,0.504
        - 0,0.296,0,0,1,0,1,0.289,0.886,0.283,0.818,0.288,0.88,0.363,0.765,0.36,0.657,0.377,0.486,0.38
      threshold: 30
      contour_area: 10
      improve_contrast: true
    zones:
      Salon:
        coordinates:
          0,0.304,0,0.619,0,1,1,1,1,0.282,0.952,0.28,0.897,0.284,0.824,0.289,0.884,0.366,0.859,0.367,0.845,0.801,0.663,0.758,0.662,0.723,0.623,0.705,0.623,0.641,0.545,0.618,0.55,0.381,0.498,0.384,0.248,0.342,0.023,0.301
        inertia: 3
        loitering_time: 0
      Entrée:
        coordinates:
          0.551,0.383,0.547,0.617,0.625,0.639,0.623,0.701,0.665,0.721,0.675,0.369,0.645,0.381,0.601,0.385
        loitering_time: 0
        inertia: 3
      Cuisine:
        coordinates: 0.678,0.375,0.664,0.752,0.841,0.797,0.857,0.362,0.815,0.356,0.78,0.358
        loitering_time: 0
        inertia: 3
    objects:
      mask: 0.037,0.499,0.045,0.673,0.21,0.659,0.208,0.505

  Camera_Annke_C800_Test_1:
    enabled: true
    ffmpeg:
      hwaccel_args: preset-vaapi
      # hwaccel_args: preset-intel-qsv-h265
      inputs:
        - path: rtsp://127.0.0.1:8554/Camera_Annke_C800_Test_1 # <--- the name here must match the name of the camera in restream
          input_args: preset-rtsp-restream
          roles:
            - record
        - path: rtsp://127.0.0.1:8554/Camera_Annke_C800_Test_1_sub # <--- the name here must match the name of the camera in restream
          input_args: preset-rtsp-restream
          roles:
            - detect
      #output_args:
      #  record: -f segment -segment_time 10 -segment_format mp4 -reset_timestamps 1 -strftime 1 -c:v copy -tag:v hvc1 -bsf:v hevc_mp4toannexb -c:a aac
    detect:
      enabled: true # <---- disable detection until you have a working camera feed
      fps: 10
      width: 1280 # 640 <---- update for your camera's resolution
      height: 720 # 480 <---- update for your camera's resolution
    record: # <----- Enable recording
      enabled: true
    snapshots:
      enabled: true
    motion:
      mask:
        - 0.037,0.628,0.045,0.842,0.209,0.843,0.2,0.642
        - 0,0.35,0,0,1,0,1,0.279,0.893,0.289,0.889,0.343,0.923,0.398,0.765,0.415,0.714,0.421,0.716,0.438,0.58,0.456,0.493,0.408
      threshold: 30
      contour_area: 10
      improve_contrast: true
    zones:
      Salon:
        coordinates:
          0,0.352,0,0.619,0,1,1,1,1,0.275,0.893,0.286,0.888,0.342,0.926,0.4,0.902,0.415,0.892,0.948,0.707,0.936,0.708,0.888,0.666,0.824,0.582,0.816,0.579,0.457,0.497,0.41,0.328,0.394,0.033,0.353
        inertia: 3
        loitering_time: 0
      Entrée:
        coordinates:
          0.579,0.454,0.58,0.621,0.582,0.813,0.668,0.82,0.708,0.882,0.718,0.434,0.688,0.446,0.644,0.45
        loitering_time: 0
        inertia: 3
      Cuisine:
        coordinates: 0.718,0.42,0.709,0.932,0.889,0.947,0.903,0.402,0.859,0.404,0.79,0.41
        loitering_time: 0
        inertia: 3
    objects:
      mask: 0.038,0.635,0.046,0.839,0.204,0.839,0.2,0.641

  Camera_Annke_C800_Test_2:
    enabled: true
    ffmpeg:
      hwaccel_args: preset-vaapi
      # hwaccel_args: preset-intel-qsv-h265
      inputs:
        - path: rtsp://127.0.0.1:8554/Camera_Annke_C800_Test_2 # <--- the name here must match the name of the camera in restream
          input_args: preset-rtsp-restream
          roles:
            - record
        - path: rtsp://127.0.0.1:8554/Camera_Annke_C800_Test_2_sub # <--- the name here must match the name of the camera in restream
          input_args: preset-rtsp-restream
          roles:
            - detect
      #output_args:
      #  record: -f segment -segment_time 10 -segment_format mp4 -reset_timestamps 1 -strftime 1 -c:v copy -tag:v hvc1 -bsf:v hevc_mp4toannexb -c:a aac
    detect:
      enabled: true # <---- disable detection until you have a working camera feed
      fps: 10
      width: 1280 # 640 <---- update for your camera's resolution
      height: 720 # 480 <---- update for your camera's resolution
    record: # See the details of these entries in the global section
      enabled: true
      retain:
        days: 2 # <- number of days to keep continuous recordings -- set to 0 to purge
        mode: all
      events:
        retain:
          default: 2 # <- number of days to keep event recordings -- set to 0 to purge
          mode: motion
          objects:
            person: 2
            cat: 2
    snapshots: # See the details of these entries in the global section
      enabled: true
      retain:
        # Required: Default retention days (default: shown below)
        default: 2
        # Optional: Per object retention days
        objects:
          person: 2
      # Optional: quality of the encoded jpeg, 0-100 (default: shown below)
      quality: 100
    # Optional
    zones:
      Fenêtre_bureau:
        coordinates: 1,1,0.796,0.774,0.807,0.425,0.779,0,1,0,1,0.327,1,0.662
        loitering_time: 0
        inertia: 3
      Arbre_à_chat:
        coordinates: 0.479,0.723,0.724,0.74,0.739,0.344,0.717,0.011,0.476,0.017,0.515,0.327
        loitering_time: 0
      Étagère_bureau:
        coordinates: 0,0.592,0.413,0.642,0.414,0.383,0,0.377
        loitering_time: 0
      Bureau:
        coordinates: 0,1,1,1,1,0,0,0
        loitering_time: 0

ui:
  # Optional: Set a timezone to use in the UI (default: use browser local time)
  # timezone: America/Denver
  # Optional: Set the time format used.
  # Options are browser, 12hour, or 24hour (default: browser)
  time_format: 24hour
  # Optional: Set the date style for a specified length.
  # Options are: full, long, medium, short
  # Examples:
  # short: 2/11/23
  # medium: Feb 11, 2023
  # full: Saturday, February 11, 2023
  # (default: short).
  date_style: short
  # Optional: Set the time style for a specified length.
  # Options are: full, long, medium, short
  # Examples:
  # short: 8:14 PM
  # medium: 8:15:22 PM
  # full: 8:15:22 PM Mountain Standard Time
  # (default: medium).
  time_style: medium
  # Optional: Ability to manually override the date / time styling to use strftime format
  # https://www.gnu.org/software/libc/manual/html_node/Formatting-Calendar-Time.html
  # possible values are shown above (default: not set)
  strftime_fmt: '%d/%m/%Y/ %H:%M:%S'

# Optional: Telemetry configuration
telemetry:
  # Optional: Enabled network interfaces for bandwidth stats monitoring (default: empty list, let nethogs search all)
  network_interfaces: []
  # Optional: Configure system stats
  stats:
    # Enable AMD GPU stats (default: shown below)
    amd_gpu_stats: false
    # Enable Intel GPU stats (default: shown below)
    intel_gpu_stats: true
    # Enable network bandwidth stats monitoring for camera ffmpeg processes, go2rtc, and object detectors. (default: shown below)
    # NOTE: The container must either be privileged or have cap_net_admin, cap_net_raw capabilities enabled.
    network_bandwidth: false
  # Optional: Enable the latest version outbound check (default: shown below)
  # NOTE: If you use the HomeAssistant integration, disabling this will prevent it from reporting new versions
  version_check: true

version: 0.14
```

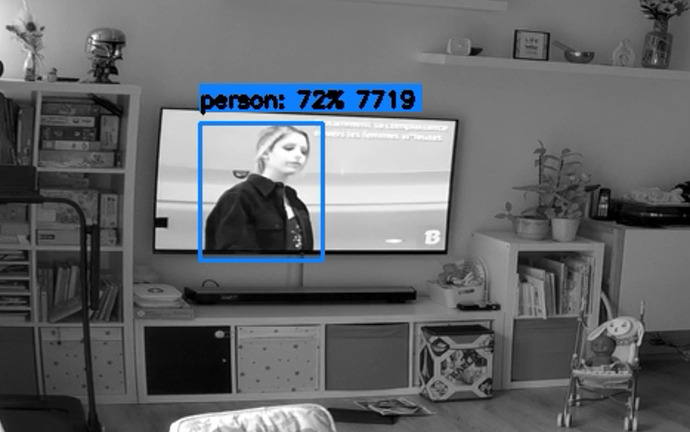

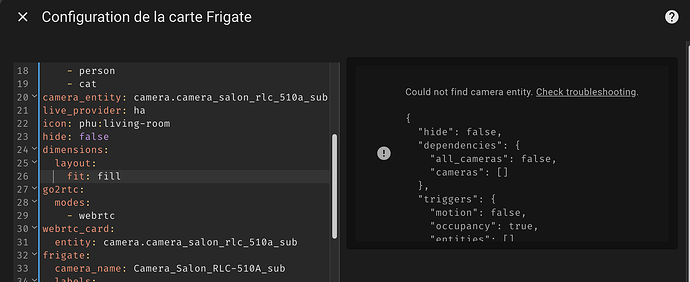

And a picture from the camera:

That's it.

Thanks in advance, and have a good evening.