Hello,

My problem

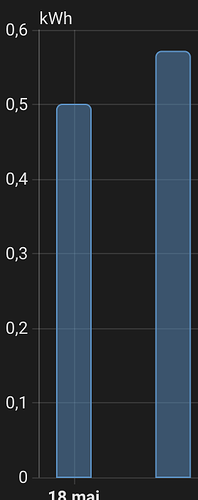

Since May 8, there has been a "gap" in my Energy dashboard between 1:00 and 2:00 that I can't explain. My Proxmox backups run at 5:00 AM and my HA backups at 6:40 AM.

I see this in the logs at 2:00:

```
2025-05-17 02:00:10.289 WARNING (Recorder) [homeassistant.components.recorder.util] Blocked attempt to insert duplicated statistic rows, please report at https://github.com/home-assistant/core/issues?q=is%3Aopen+is%3Aissue+label%3A%22integration%3A+recorder%22
```

I searched on GitHub but found nothing apart from a similar report that never got an answer; it may not even be related to my problem.

Is anyone else seeing the same behavior?

Thanks

Logs between 1:00 and 2:00

```
2025-05-17 01:33:51.965 ERROR (MainThread) [kasa.smart.smartdevice] Error querying 192.168.50.86 for modules 'Time, AutoOff, DeviceModule' after first update: ('Unable to query the device: 192.168.50.86: ', TimeoutError())
2025-05-17 01:33:57.964 ERROR (MainThread) [kasa.smart.smartdevice] Error querying 192.168.50.86 individually for module query 'get_device_time' after first update: ('Unable to query the device: 192.168.50.86: ', TimeoutError())
2025-05-17 01:34:03.963 ERROR (MainThread) [kasa.smart.smartdevice] Error querying 192.168.50.86 individually for module query 'get_auto_off_config' after first update: ('Unable to query the device: 192.168.50.86: ', TimeoutError())
2025-05-17 01:34:07.051 WARNING (MainThread) [kasa.smart.smartdevice] Error processing Time for device 192.168.50.86, module will be unavailable: get_device_time for Time (error_code=INTERNAL_QUERY_ERROR)
2025-05-17 01:34:07.051 WARNING (MainThread) [kasa.smart.smartdevice] Error processing AutoOff for device 192.168.50.86, module will be unavailable: get_auto_off_config for AutoOff (error_code=INTERNAL_QUERY_ERROR)
2025-05-17 01:34:07.052 WARNING (MainThread) [homeassistant.components.tplink.entity] Unable to read data for <DeviceType.Plug at 192.168.50.86 - C6N-Plug (P100)> number.imprimante_plug_tapo_eteignez_dans: get_auto_off_config for AutoOff (error_code=INTERNAL_QUERY_ERROR)
2025-05-17 01:34:07.052 WARNING (MainThread) [homeassistant.components.tplink.entity] Unable to read data for <DeviceType.Plug at 192.168.50.86 - C6N-Plug (P100)> switch.imprimante_plug_tapo_arret_automatique_active: get_auto_off_config for AutoOff (error_code=INTERNAL_QUERY_ERROR)
2025-05-17 01:36:47.964 ERROR (MainThread) [kasa.smart.smartdevice] Error querying 192.168.50.86 for modules 'Time, AutoOff, DeviceModule' after first update: ('Unable to query the device: 192.168.50.86: ', TimeoutError())
2025-05-17 02:00:10.284 ERROR (Recorder) [homeassistant.helpers.recorder] Error executing query
Traceback (most recent call last):
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 2115, in _exec_insertmany_context
    dialect.do_execute(
    ~~~~~~~~~~~~~~~~~~^
        cursor,
        ^^^^^^^
    ...<2 lines>...
        context,
        ^^^^^^^^
    )
    ^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/default.py", line 945, in do_execute
    cursor.execute(statement, parameters)
    ~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^^^^^
sqlite3.IntegrityError: UNIQUE constraint failed: statistics.metadata_id, statistics.start_ts

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/usr/src/homeassistant/homeassistant/helpers/recorder.py", line 96, in session_scope
    yield session
  File "/usr/src/homeassistant/homeassistant/components/recorder/statistics.py", line 611, in compile_statistics
    modified_statistic_ids = _compile_statistics(
        instance, session, start, fire_events
    )
  File "/usr/src/homeassistant/homeassistant/components/recorder/statistics.py", line 718, in _compile_statistics
    session.flush() # populate the ids of the new StatisticsShortTerm rows
    ~~~~~~~~~~~~~^^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/session.py", line 4353, in flush
    self._flush(objects)
    ~~~~~~~~~~~^^^^^^^^^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/session.py", line 4488, in _flush
    with util.safe_reraise():
         ~~~~~~~~~~~~~~~~~^^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/util/langhelpers.py", line 146, in __exit__
    raise exc_value.with_traceback(exc_tb)
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/session.py", line 4449, in _flush
    flush_context.execute()
    ~~~~~~~~~~~~~~~~~~~~~^^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/unitofwork.py", line 466, in execute
    rec.execute(self)
    ~~~~~~~~~~~^^^^^^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/unitofwork.py", line 642, in execute
    util.preloaded.orm_persistence.save_obj(
    ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^
        self.mapper,
        ^^^^^^^^^^^^
        uow.states_for_mapper_hierarchy(self.mapper, False, False),
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
        uow,
        ^^^^
    )
    ^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/persistence.py", line 93, in save_obj
    _emit_insert_statements(
    ~~~~~~~~~~~~~~~~~~~~~~~^
        base_mapper,
        ^^^^^^^^^^^^
    ...<3 lines>...
        insert,
        ^^^^^^^
    )
    ^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/orm/persistence.py", line 1143, in _emit_insert_statements
    result = connection.execute(
        statement, multiparams, execution_options=execution_options
    )
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 1416, in execute
    return meth(
        self,
        distilled_parameters,
        execution_options or NO_OPTIONS,
    )
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/sql/elements.py", line 523, in _execute_on_connection
    return connection._execute_clauseelement(
           ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^
        self, distilled_params, execution_options
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    )
    ^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 1638, in _execute_clauseelement
    ret = self._execute_context(
        dialect,
    ...<8 lines>...
        cache_hit=cache_hit,
    )
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 1841, in _execute_context
    return self._exec_insertmany_context(dialect, context)
           ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 2123, in _exec_insertmany_context
    self._handle_dbapi_exception(
    ~~~~~~~~~~~~~~~~~~~~~~~~~~~~^
        e,
        ^^
    ...<4 lines>...
        is_sub_exec=True,
        ^^^^^^^^^^^^^^^^^
    )
    ^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 2352, in _handle_dbapi_exception
    raise sqlalchemy_exception.with_traceback(exc_info[2]) from e
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/base.py", line 2115, in _exec_insertmany_context
    dialect.do_execute(
    ~~~~~~~~~~~~~~~~~~^
        cursor,
        ^^^^^^^
    ...<2 lines>...
        context,
        ^^^^^^^^
    )
    ^
  File "/usr/local/lib/python3.13/site-packages/sqlalchemy/engine/default.py", line 945, in do_execute
    cursor.execute(statement, parameters)
    ~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^^^^^
sqlalchemy.exc.IntegrityError: (sqlite3.IntegrityError) UNIQUE constraint failed: statistics.metadata_id, statistics.start_ts
[SQL: INSERT INTO statistics (created, created_ts, metadata_id, start, start_ts, mean, mean_weight, min, max, last_reset, last_reset_ts, state, sum) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?) RETURNING id]
[parameters: (None, 1747440010.2627625, 913, None, 1747436400.0, None, None, None, None, None, None, 7.05, 4.63)]
(Background on this error at: https://sqlalche.me/e/20/gkpj)
2025-05-17 02:00:10.289 WARNING (Recorder) [homeassistant.components.recorder.util] Blocked attempt to insert duplicated statistic rows, please report at https://github.com/home-assistant/core/issues?q=is%3Aopen+is%3Aissue+label%3A%22integration%3A+recorder%22
Traceback (most recent call last):
  [... identical to the traceback of the 02:00:10.284 ERROR above ...]
2025-05-17 02:13:30.381 ERROR (MainThread) [homeassistant] Error doing job: Task exception was never retrieved (None)
Traceback (most recent call last):
```
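The `[parameters: ...]` line of the failed INSERT contains two Unix timestamps; by the column order of the `INSERT INTO statistics (...)` statement, they are `created_ts` (when the recorder tried to write the row) and `start_ts` (the start of the hourly period). A minimal sketch to convert them to local time, assuming Europe/Paris is on summer time (UTC+2) in May. It suggests that the hourly row for `metadata_id` 913 that was rejected at 02:00:10 is the one covering 01:00 to 02:00, i.e. the same slot as the gap in the dashboard.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Europe/Paris is on summer time (CEST, UTC+2) in May.
CEST = timezone(timedelta(hours=2), "CEST")

# Values copied from the [parameters: ...] line of the failed INSERT.
CREATED_TS = 1747440010.2627625  # created_ts: when the recorder tried to write the row
START_TS = 1747436400.0          # start_ts: start of the hourly period for metadata_id 913


def to_local(ts: float) -> datetime:
    """Convert a Unix timestamp (seconds since the epoch, UTC) to CEST local time."""
    return datetime.fromtimestamp(ts, tz=CEST)


if __name__ == "__main__":
    print("period start :", to_local(START_TS).isoformat())
    print("period end   :", to_local(START_TS + 3600).isoformat())
    print("insert tried :", to_local(CREATED_TS).isoformat(timespec="seconds"))
```

Running it prints `2025-05-17T01:00:00+02:00`, `2025-05-17T02:00:00+02:00` and `2025-05-17T02:00:10+02:00`: the statistics for an hour are compiled just after that hour ends, and the row for 01:00 was blocked because one already existed for the same `(metadata_id, start_ts)`.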

My configuration

## System Information

| version | core-2025.5.2 |
|---|---|
| installation_type | Home Assistant OS |
| dev | false |
| hassio | true |
| docker | true |
| user | root |
| virtualenv | false |
| python_version | 3.13.3 |
| os_name | Linux |
| os_version | 6.12.23-haos |
| arch | x86_64 |
| timezone | Europe/Paris |
| config_dir | /config |

Home Assistant Community Store

| GitHub API | ok |
|---|---|
| GitHub Content | ok |
| GitHub Web | ok |
| HACS Data | ok |
| GitHub API Calls Remaining | 4987 |
| Installed Version | 2.0.5 |
| Stage | running |
| Available Repositories | 1828 |
| Downloaded Repositories | 23 |

Home Assistant Cloud

| logged_in | false |
|---|---|
| can_reach_cert_server | ok |
| can_reach_cloud_auth | ok |
| can_reach_cloud | ok |

Home Assistant Supervisor

| host_os | Home Assistant OS 15.2 |
|---|---|
| update_channel | stable |
| supervisor_version | supervisor-2025.05.1 |
| agent_version | 1.7.2 |
| docker_version | 28.0.4 |
| disk_total | 30.8 GB |
| disk_used | 11.0 GB |
| healthy | true |
| supported | true |
| host_connectivity | true |
| supervisor_connectivity | true |
| ntp_synchronized | true |
| virtualization | kvm |
| board | ova |
| supervisor_api | ok |
| version_api | ok |
| installed_addons | Matter Server (8.0.0), Zigbee2MQTT (2.3.0-1), Ring-MQTT with Video Streaming (5.8.1), File editor (5.8.0), motionEye (0.22.1), ESPHome Device Builder (2025.4.2), Mosquitto broker (6.5.1), Network UPS Tools (0.14.1), Z-Wave JS UI (4.3.0), Terminal & SSH (9.17.0), Neolink-latest (0.0.2) |

Dashboards

| dashboards | 2 |
|---|---|
| resources | 15 |
| views | 16 |
| mode | storage |

Network Configuration

| adapters | lo (disabled), enp6s18 (enabled, default, auto), docker0 (disabled), hassio (disabled), vethb7087a1 (disabled), veth328bd04 (disabled), vethfb4b368 (disabled), vethaa5771b (disabled), veth65dc774 (disabled), veth4f85a08 (disabled), veth7e8aee6 (disabled), vethc57541a (disabled), veth7544032 (disabled), vethec8e801 (disabled), vethb42871e (disabled), veth5413866 (disabled) |
|---|---|
| ipv4_addresses | lo (127.0.0.1/8), enp6s18 (192.168.50.247/24), docker0 (172.30.232.1/23), hassio (172.30.32.1/23), vethb7087a1 (), veth328bd04 (), vethfb4b368 (), vethaa5771b (), veth65dc774 (), veth4f85a08 (), veth7e8aee6 (), vethc57541a (), veth7544032 (), vethec8e801 (), vethb42871e (), veth5413866 () |
| ipv6_addresses | lo (::1/128), enp6s18 (fe80::cf9c:f6ba:c12c:ace7/64), docker0 (fe80::60a6:92ff:fe9b:c39/64), hassio (fe80::5c90:23ff:fe4a:e30b/64), vethb7087a1 (fe80::a4db:6fff:fe90:962/64), veth328bd04 (fe80::68e4:62ff:fecd:8549/64), vethfb4b368 (fe80::84a4:c4ff:fe71:e283/64), vethaa5771b (fe80::7832:c7ff:fe98:2432/64), veth65dc774 (fe80::64c2:3bff:fe42:b60/64), veth4f85a08 (fe80::f4bd:47ff:fecb:72b2/64), veth7e8aee6 (fe80::8c41:59ff:fe1f:70f6/64), vethc57541a (fe80::f01c:7fff:fead:f626/64), veth7544032 (fe80::b42b:67ff:fe74:551e/64), vethec8e801 (fe80::a850:30ff:fed1:770c/64), vethb42871e (fe80::5c4a:6eff:fe49:b7f7/64), veth5413866 (fe80::cf1:e1ff:fe1e:95d/64) |
| announce_addresses | 192.168.50.247, fe80::cf9c:f6ba:c12c:ace7 |

Recorder

| oldest_recorder_run | May 6, 2025 at 11:02 |
|---|---|
| current_recorder_run | May 18, 2025 at 09:49 |
| estimated_db_size | 379.15 MiB |
| database_engine | sqlite |
| database_version | 3.48.0 |